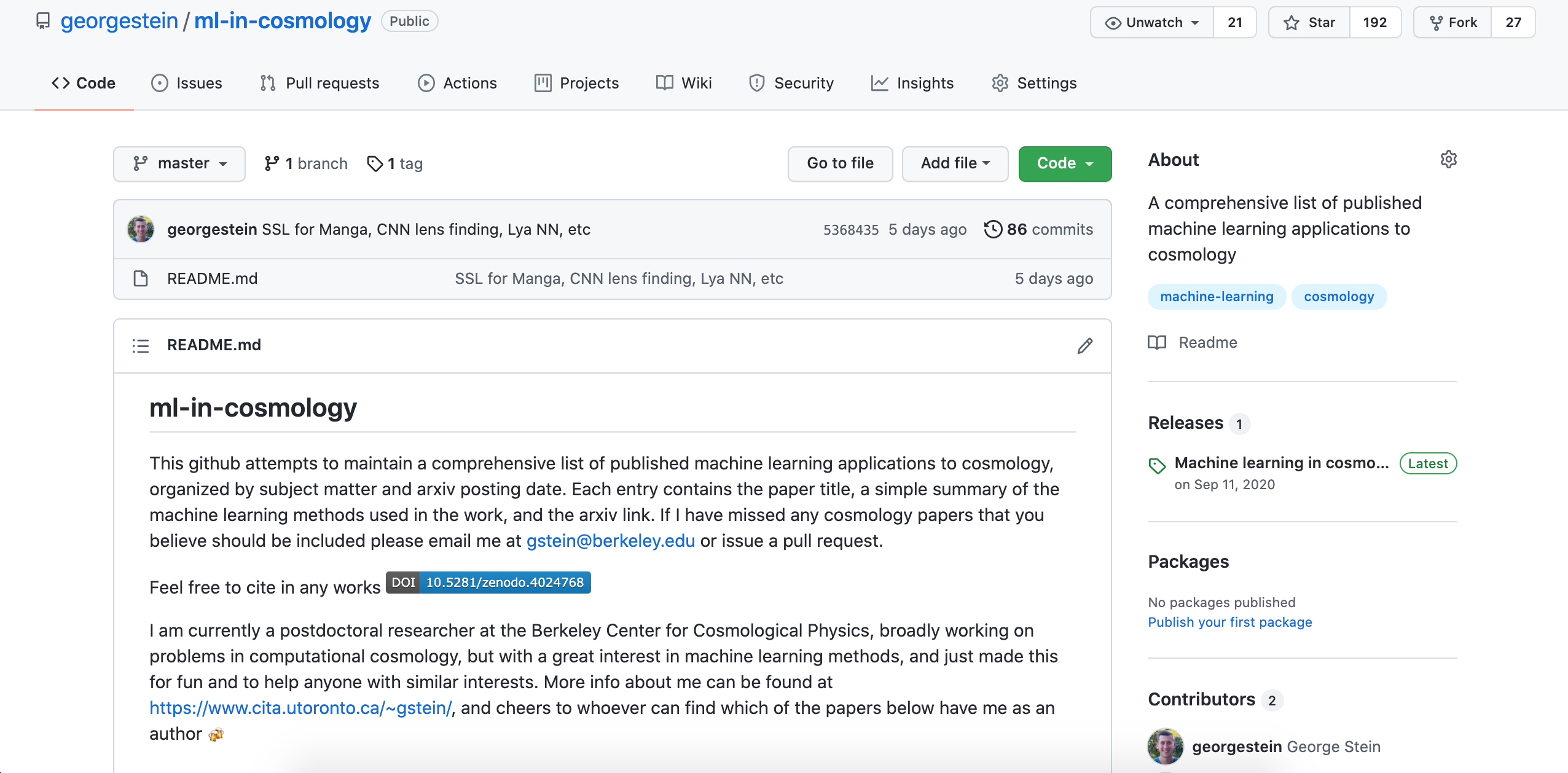

Machine learning in cosmology

The rapid pace of machine learning is difficult to keep up with, yet recognizing trends and analysing state-of-the-art methods are essential to achieving the best results for your problem.

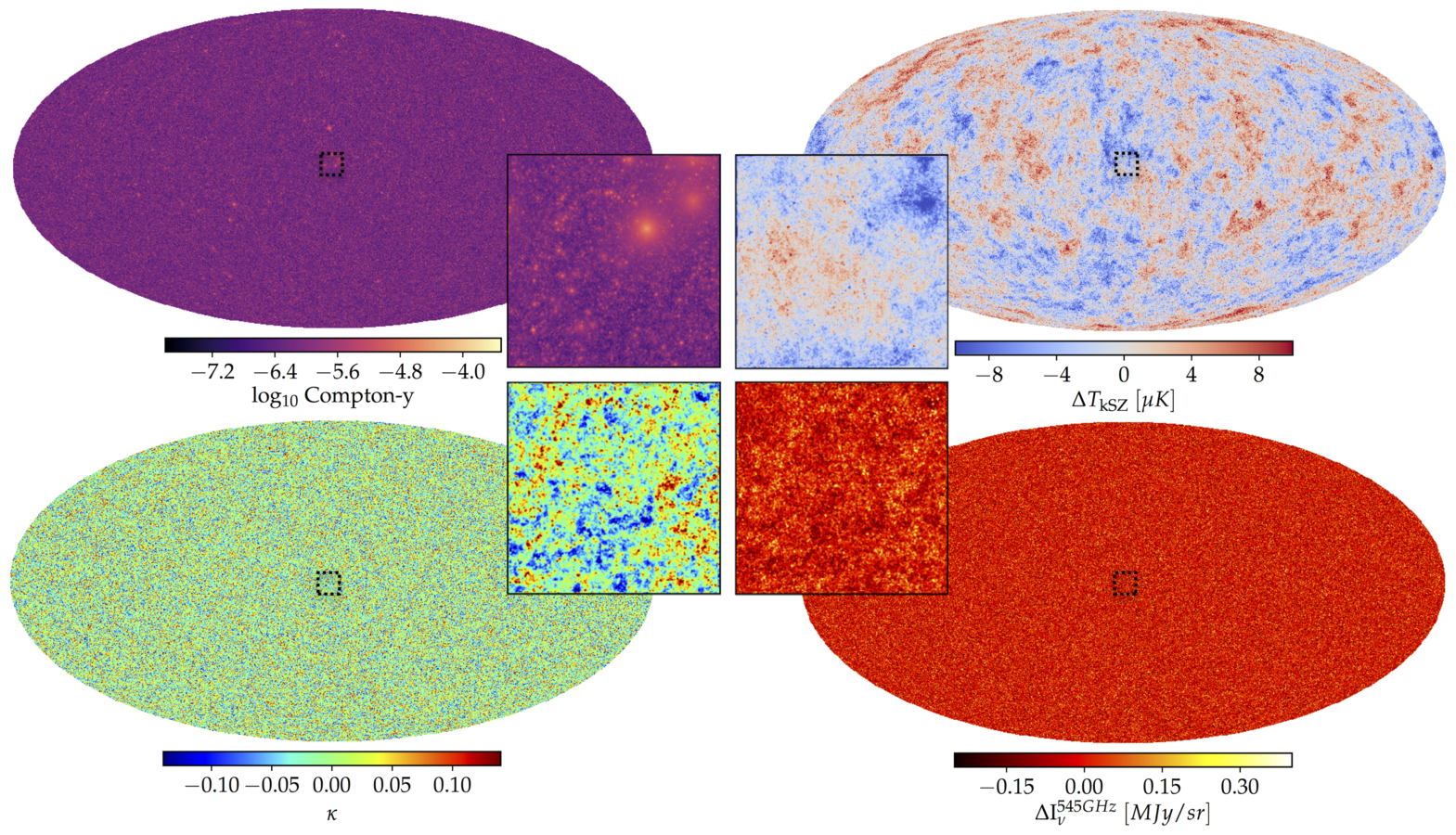

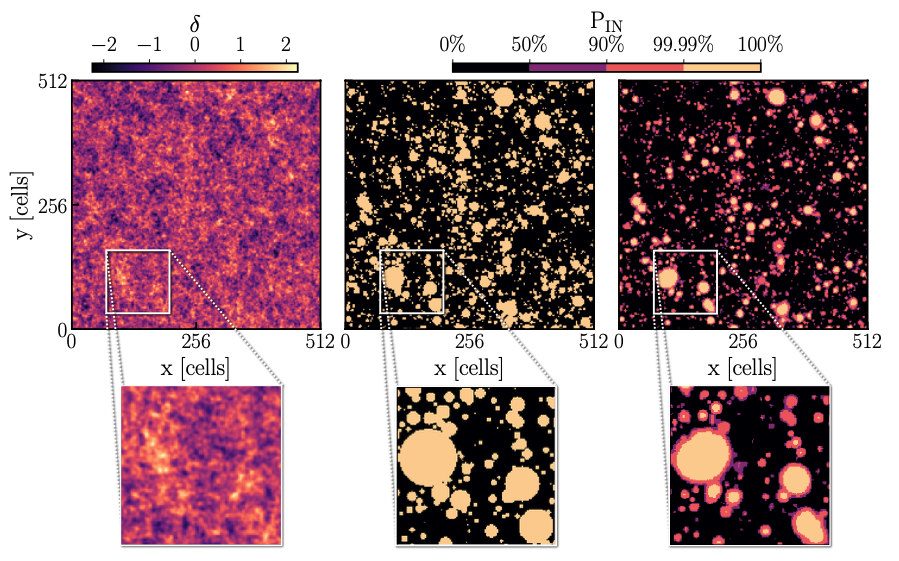

Since 2018 I have curated a (now popular) comprehensive public archive of ML applications in cosmology, to help scientists and domain experts identify AI solutions applicable to novel problems and implement them rapidly. Take a look to see what's been going on in the field or to design your own project!

github.com/georgestein/ml-in-cosmology